Artificial Intelligence (AI) technologies are evolving at breakneck speed. Today's cutting-edge model may become obsolete tomorrow. This rapid evolution, while exciting, presents a challenge – how to leverage the current best AI capabilities without being tied to a single model or provider.

At InvGate, we apply a strategy that we call “agnostic AI” (as in platform agnostic). This approach allows us to adopt various AI models and providers, selecting the most suitable option for each task.

In this article, we will delve deeper into how we implement the agnostic AI perspective at InvGate, exploring the challenges of seamlessly switching between various AI models and providers. Plus, we will discuss the methodological aspects that guide us in selecting the most suitable choices for each task.

Read on to discover how we make the most of the agnostic AI approach and how it can help improve your AI adoption strategy.

Two ways to implement AI

Imagine being in a hypothetical situation akin to the famous trolley problem, where the trolley represents your business thundering along the tracks of technological progress, and you're at a juncture.

Down one set of tracks, there's the path of AI-as-a-Service (AIaaS) providers, offering convenience and access to state-of-the-art AI models. Down the other track, there's the path of self-hosted AI models, providing control and customization.

You can't see what lies further down each track. The AIaaS route could potentially lead to dependency on the provider, or it might evolve into a perfect symbiosis with your business. The self-hosted path could lead to high upkeep costs, or it might turn out to be the best investment you ever made. Which track do you choose?

Fortunately, well-engineered software allows us to ride both tracks simultaneously. By constructing the appropriate abstractions, we can blend and switch between multiple AI providers and self-hosted models. It's less of a dilemma and more of an opportunity, giving us the flexibility to leverage the strengths of both worlds.

AI providers: The more the merrier

For some time, the big Cloud Service Providers have been competing in the AIaaS space, and it's worth recapping the top three incumbents.

- Microsoft Azure allows training and deploying custom models, and also maintains a partnership with OpenAI that offers access to its state-of-the-art GPT Large Language Models (LLMs).

- Google Cloud offers a unified platform called Vertex AI to streamline AI workflows, and recently added access to its new Gemini LLM.

- Amazon Web Services lets you start with prebuilt, ready-to-use services on the Bedrock platform, then move to managed infrastructure tools like Amazon SageMaker for building, training, and deploying custom models.

Recently, however, we have seen rapid growth in the number of AIaaS providers, with many smaller players entering the space. Modal, Replicate, and Groq, to name just a few, offer AIaaS focused on different aspects: building custom models, optimizing inference of pre-trained models, and even designing and building their own chips for maximum efficiency.

The AI landscape is evolving rapidly, while at the same time there is an increasing demand for AI-powered products. The upside is that AI capabilities are constantly improving, and costs are dropping quickly, making AI ever more useful and accessible. Yet, the best choice of an AI provider today can quickly become obsolete. For this reason, we need to remain flexible, agnostic.

Agnostic AI: Thriving in the era of AI

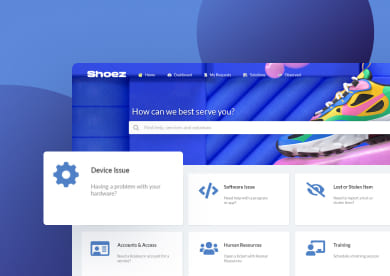

Agnostic AI is about keeping options open, leveraging the best that each AI provider offers without getting locked in. To implement it, three key elements are required: an interface, a router, and the actual providers and models.

The three key elements of agnostic AI

Interface

The first component of agnostic AI is the interface. Think of it as the entry point of the system, serving as a buffer between the internal workings of our AI system and the external requests it receives.

It's responsible for abstracting away the complex implementation details of the AI system, presenting a clean way for other parts of our software to interact with it. A well-designed interface ensures that changes to AI models or providers won't disrupt the rest of our system.
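As a rough illustration of this idea, here is a minimal Python sketch of such an interface. Every name in it (`AIRequest`, `AIResponse`, `AIBackend`, `EchoBackend`, `AIInterface`) is hypothetical and chosen for this example only, and the backend is a stand-in that calls no real service.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class AIRequest:
    """Provider-neutral request; the field names are assumptions."""
    task: str  # e.g. "summarize"
    text: str


@dataclass(frozen=True)
class AIResponse:
    text: str
    model: str  # which backend produced the answer


class AIBackend(Protocol):
    """Anything that can serve a request, hosted or self-hosted."""

    def complete(self, request: AIRequest) -> AIResponse: ...


class EchoBackend:
    """Illustrative stand-in: returns the first 20 characters of the input."""

    def complete(self, request: AIRequest) -> AIResponse:
        return AIResponse(text=request.text[:20], model="echo-v0")


class AIInterface:
    """Entry point that the rest of the application talks to."""

    def __init__(self, backend: AIBackend) -> None:
        self._backend = backend

    def summarize(self, text: str) -> str:
        request = AIRequest(task="summarize", text=text)
        return self._backend.complete(request).text


ai = AIInterface(EchoBackend())
summary = ai.summarize("Restart the VPN client, then sign in again.")
```

Callers only ever see `summarize`, so swapping `EchoBackend` for another backend would not require changes anywhere else in the application.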

Router

Next, we have the router. Once a request comes through the interface, the router takes over. Its job is to analyze the request and decide where to send it. This decision is not trivial: it’s based on various factors such as the type of request, the capabilities of the available AI models, and the cost-effectiveness of using a particular model or provider.

The router plays a crucial role in ensuring that each request is handled most efficiently, guiding it to the provider or model best suited to fulfill the task.
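One simple way to picture the routing decision is a lookup that filters models by capability and then picks the cheapest. The sketch below assumes a hypothetical `ModelInfo` catalog with made-up model names and prices; a real router would likely weigh more factors than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    name: str
    tasks: frozenset[str]        # request types this model can handle
    cost_per_1k_tokens: float    # illustrative numbers, not real pricing


def route(task: str, catalog: list[ModelInfo]) -> ModelInfo:
    """Return the cheapest model in the catalog that supports the task."""
    candidates = [m for m in catalog if task in m.tasks]
    if not candidates:
        raise LookupError(f"no model supports task {task!r}")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


# Hypothetical catalog mixing a hosted LLM with self-hosted specialists.
catalog = [
    ModelInfo("hosted-llm", frozenset({"summarize", "classify"}), 0.50),
    ModelInfo("local-summarizer", frozenset({"summarize"}), 0.05),
    ModelInfo("local-classifier", frozenset({"classify"}), 0.02),
]

chosen = route("summarize", catalog)
```

Because the selection rule lives in one function, changing which model handles a task means updating the catalog or the rule, not the callers.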

Providers and models

The last piece of the puzzle is the set of providers and AI models themselves. These are the entities that perform the actual AI tasks. In this module, we also handle specific access concerns, including but not limited to authentication, data pre-processing, and results formatting.

The providers and models can be a combination of AIaaS offerings and self-hosted solutions. AIaaS providers offer multiple benefits – including state-of-the-art models, scalability, and reduced maintenance.

On the other hand, self-hosted models offer greater control and customization. The choice between these options is not binary; a well-implemented agnostic AI system can seamlessly switch between them as needed.
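To make the provider module more concrete, the following sketch shows two adapters that expose the same `summarize` method while handling authentication, pre-processing, and results formatting internally. The class names, the payload shape, and the `send` callable (a stand-in for a real network call) are all assumptions made for illustration.

```python
from typing import Callable


def normalize(text: str) -> str:
    """Shared pre-processing: collapse runs of whitespace."""
    return " ".join(text.split())


class HostedProvider:
    """Adapter for an AIaaS endpoint; `send` stands in for the HTTP call."""

    def __init__(self, api_key: str, send: Callable[[dict], dict]) -> None:
        self._api_key = api_key
        self._send = send

    def summarize(self, text: str) -> str:
        # Authentication and pre-processing happen here, not in the caller.
        payload = {"auth": self._api_key, "input": normalize(text)}
        raw = self._send(payload)
        return raw["output"].strip()  # results formatting


class SelfHostedProvider:
    """Adapter for a model running in our own infrastructure."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model = model

    def summarize(self, text: str) -> str:
        return self._model(normalize(text)).strip()


def fake_send(payload: dict) -> dict:
    """Fake transport used only for this example."""
    return {"output": "  " + payload["input"][:12] + "  "}


providers = [
    HostedProvider("demo-key", fake_send),
    SelfHostedProvider(lambda text: text[:12]),
]
outputs = [p.summarize("Clear   the cache\nand retry.") for p in providers]
```

Both adapters return the same kind of result, which is what lets the router treat hosted and self-hosted options interchangeably.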

How to choose AI providers and models

The choice of AI providers and models to include in our system is a crucial one. However, it’s not a static decision, but rather a dynamic one that is constantly reassessed and updated as the AI landscape evolves. This is where an automated evaluation benchmark comes into play.

When we mention an “evaluation benchmark”, we refer to a standard or point of reference against which AI models are compared and assessed.

For example, imagine we're testing AI models designed to summarize knowledge base articles. Our benchmark might involve feeding these models the same set of articles and comparing the summaries they produce. Factors such as the accuracy of the information, the readability of the text, and the speed of the summarization would all be considered in the evaluation.

But before we let our evaluation pipeline loose on performance comparisons, there's a crucial pre-screening stage we need to go through. This stage involves making sure our potential AI providers tick all the right boxes in terms of compliance, security, and regional availability.

Compliance and security: The non-negotiables

First off, we're looking for providers who have their compliance and security game on point. We can't compromise on these aspects. We need providers who can confidently say they meet all the standards, like SOC 2, which focuses on managing customer data based on five important principles: security, availability, processing integrity, confidentiality, and privacy.

And it's not just about having the right certifications. We want providers who take data security as seriously as we do. This means solid encryption practices, strict access controls, and a well-prepared incident response plan. If a provider can't convince us they've got these areas covered, it's a deal-breaker, no matter how attractive their capabilities or pricing might be.

Regional availability: The world is our stage

Next, we need to consider if the provider is ready to perform on a global stage. We're not just talking about having servers in the right places. We also need to ensure they're up to speed with regional data laws and regulations. For example, if we're catering to clients in the European Union, we need to be confident that our provider is well-versed with the General Data Protection Regulation (GDPR).

So, before we start performance comparisons with our automated evaluation pipeline, we make sure we're only dealing with providers who pass this essential pre-screening stage. It's our way of ensuring our AI system is not just high-performing, but also trustworthy and globally ready.
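The pre-screening stage can be thought of as a filter that runs before any benchmark. Here is a minimal sketch, assuming a hypothetical `ProviderProfile` record whose fields mirror the criteria above; the provider names and their attributes are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderProfile:
    name: str
    certifications: frozenset[str]
    regions: frozenset[str]
    encrypts_data: bool
    has_access_controls: bool
    has_incident_response_plan: bool


REQUIRED_CERTIFICATIONS = frozenset({"SOC 2"})


def passes_prescreening(p: ProviderProfile, required_regions: frozenset[str]) -> bool:
    """A provider must satisfy every criterion; there is no partial credit."""
    return (
        REQUIRED_CERTIFICATIONS <= p.certifications  # subset check
        and required_regions <= p.regions
        and p.encrypts_data
        and p.has_access_controls
        and p.has_incident_response_plan
    )


# Invented providers for illustration.
profiles = [
    ProviderProfile("provider-a", frozenset({"SOC 2"}), frozenset({"eu", "us"}), True, True, True),
    ProviderProfile("provider-b", frozenset(), frozenset({"eu", "us"}), True, True, True),
    ProviderProfile("provider-c", frozenset({"SOC 2"}), frozenset({"us"}), True, True, True),
]

shortlist = [p.name for p in profiles if passes_prescreening(p, frozenset({"eu"}))]
```

Only providers on the shortlist would go on to the performance comparison.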

Automated evaluation benchmark

Now that we have a shortlist of AI providers, it’s time to build and automate the evaluation benchmark. This is essentially a testing suite for AI providers and models.

Every time a new model is released, or an existing model gets an update, the benchmark reruns its suite of tests to evaluate the performance of the new or updated artifact. This process allows us to determine whether our current choice remains the best, or whether we should update the router module to reflect a new top performer.

The evaluation benchmark is not just about testing accuracy, but also efficiency and cost. It measures how quickly the models can process requests, ensuring that the response times are within the acceptable limits for our workloads. It also considers the financial aspect, calculating the cost of using each model to ensure that it fits within selected budgets.
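A benchmark along these lines could be sketched as follows. This is not a description of any specific pipeline: the `Candidate` and `Result` types are hypothetical, the candidates are toy functions rather than real models, and `keyword_coverage` is a deliberately crude stand-in for a real accuracy metric.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    summarize: Callable[[str], str]
    cost_per_call: float  # illustrative numbers


@dataclass(frozen=True)
class Result:
    name: str
    quality: float
    avg_latency_s: float
    total_cost: float


def keyword_coverage(summary: str, keywords: list[str]) -> float:
    """Crude accuracy proxy: share of expected keywords found in the summary."""
    hits = sum(1 for k in keywords if k.lower() in summary.lower())
    return hits / len(keywords)


def run_benchmark(candidates: list[Candidate], cases: list[tuple[str, list[str]]]) -> list[Result]:
    """Feed every candidate the same articles and record quality, latency, and cost."""
    results = []
    for c in candidates:
        scores, elapsed = [], 0.0
        for article, keywords in cases:
            start = time.perf_counter()
            summary = c.summarize(article)
            elapsed += time.perf_counter() - start
            scores.append(keyword_coverage(summary, keywords))
        results.append(Result(c.name, sum(scores) / len(scores),
                              elapsed / len(cases), c.cost_per_call * len(cases)))
    return results


def pick_best(results: list[Result], max_latency_s: float, budget: float) -> Optional[Result]:
    """Highest quality among candidates within the latency and budget limits."""
    eligible = [r for r in results
                if r.avg_latency_s <= max_latency_s and r.total_cost <= budget]
    return max(eligible, key=lambda r: r.quality) if eligible else None


cases = [
    ("Reset your password from the login page using the email link.", ["password", "email"]),
    ("Install the agent, then restart the service to apply changes.", ["agent", "restart"]),
]
candidates = [
    Candidate("full-text", lambda t: t, 0.04),
    Candidate("truncate", lambda t: t[:25], 0.001),
    Candidate("premium", lambda t: t, 1.0),
]
results = run_benchmark(candidates, cases)
best = pick_best(results, max_latency_s=1.0, budget=0.5)
```

Rerunning `run_benchmark` whenever a model is released or updated, and comparing `best` against the current routing choice, is one way to decide whether the router needs to change.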

Accommodating model specifics

It's important to note that AI models are not identical. Each model may have different input requirements, functionality, and performance characteristics. For example, LLMs (like GPT and Gemini) can summarize text in many different languages using a single prompt. However, this same task can be achieved by using multiple specialized language-specific summarization models, often not requiring a prompt.

The evaluation pipeline needs to account for these specifics, and tailor its tests to accurately assess the strengths and weaknesses of each model. In this example, we need to be able to evaluate both single models on multiple prompts and multiple models with no prompts at all.
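One possible way to put both setups on equal footing is to describe each configuration as a mapping from language to a plain summarizer function. The sketch below assumes this hypothetical `Variant` shape; the helper names, prompts, and toy models are invented for illustration.

```python
from typing import Callable

Summarizer = Callable[[str], str]
Variant = dict[str, Summarizer]  # language code -> summarizer


def prompted(llm: Callable[[str], str], prompt: str) -> Summarizer:
    """Bind a prompt to a general-purpose LLM so it looks like a plain summarizer."""
    return lambda text: llm(f"{prompt}\n\n{text}")


def llm_variants(llm: Callable[[str], str], prompts: dict[str, str],
                 languages: list[str]) -> dict[str, Variant]:
    """One variant per prompt; the same model serves every language."""
    return {f"llm/{name}": {lang: prompted(llm, p) for lang in languages}
            for name, p in prompts.items()}


def specialized_variant(models: dict[str, Summarizer]) -> dict[str, Variant]:
    """A single variant made of several language-specific, prompt-free models."""
    return {"specialized": dict(models)}


def run(variants: dict[str, Variant], cases: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Produce one summary per test case for every variant."""
    return {name: [variant[lang](text) for lang, text in cases]
            for name, variant in variants.items()}


# Toy stand-ins for real models.
fake_llm = lambda full_prompt: full_prompt.splitlines()[-1][:10]
variants = {
    **llm_variants(fake_llm, {"short": "Summarize briefly.", "bullets": "Summarize as bullets."}, ["en", "es"]),
    **specialized_variant({"en": lambda t: t[:5], "es": lambda t: t[:5]}),
}
cases = [("en", "Restart the router."), ("es", "Reinicie el router.")]
outputs = run(variants, cases)
```

With every variant producing outputs in the same shape, the same scoring step can then compare prompt choices and model choices side by side.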

The evaluation pipeline needs to be as adaptable and versatile as the system it's testing. This methodological approach to choosing AI providers and models is a cornerstone of a successful agnostic AI deployment. It's about making decisions based on empirical evidence and objective testing. It's about staying flexible and open-minded, ready to adopt new solutions as they prove their worth.

Final thoughts

Agnostic AI is a strategy that encourages flexibility and adaptability, allowing us to harness the full potential of the AI landscape.

The key to implementing agnostic AI lies in the combination of a systematized evaluation pipeline and a flexible design that encapsulates different AI models and providers. The evaluation pipeline verifies that our selections are always grounded in tangible evidence and unbiased testing, while the flexible design allows us to seamlessly switch between AI models and providers.

Implementing agnostic AI does require some additional upfront work. However, this is a small cost to bear considering the long-term benefits. As anyone in software development can attest, changing system design decisions later on can be incredibly hard. By investing in agnostic AI from the outset, we can avoid such costs and ensure that our system is always ready to adapt.

In conclusion, agnostic AI offers a more future-proof approach to AI, keeping our systems ready to adopt the latest and greatest that the AI world has to offer.

As we continue to explore the vast potential of AI, staying agnostic helps us remain adaptable, flexible, and ready for anything the future might bring.