You’ve probably heard about neural networks being hailed as the next big step in artificial intelligence (AI). Beyond their often exaggerated depiction in fiction and media, neural networks have slowly but steadily become an invaluable asset in the IT world, and they remain an active area of research in data science and computer science.

We have already talked about artificial intelligence and machine learning, so now it’s time for neural networks and deep learning algorithms. In this article, we’ll look at what neural networks are, how they work, and, most importantly, how their capabilities can be applied to ITSM.

Now, let’s dive deeper into the world of neural networks.

What exactly is a Neural Network?

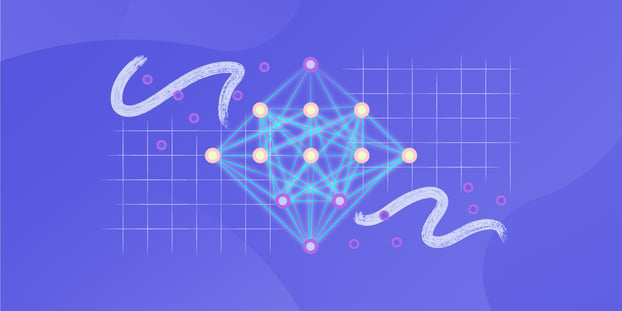

First, we need to understand the concept itself: a neural network is a self-learning algorithm. We can also define it as a computational learning system that uses an interconnected network of functions. These functions interpret, understand, and translate input data of a particular type into a desired output, often in a different, easier-to-interpret form.

The concept of neural networks is inspired by how the human brain works. Neural networks seek to emulate how neurons in the brain function collectively to understand inputs from the human senses and translate them into information.

It’s important to understand that neural networks are just one of many tools used in the broader world of machine learning. A neural network is often one component of larger machine learning and deep learning systems, used to transform complex data inputs into a form that computers can work with.

We use neural networks to solve real-life problems. Examples include speech and image recognition, spam email filtering, finance, business analytics, and medical diagnosis. We will explore these applications as we learn how they work.

How does a Neural Network work?

An artificial neural network typically involves many processing units working in tiers. The first tier receives the basic input information, much as the optic nerves receive images. Each successive tier receives the output of the tier before it, not the raw input, just as neurons farther from the optic nerve receive signals from those closer to it. Finally, the last tier produces the output based on the raw data fed through the network.

Each tier has its own processing nodes. Each node has a small sphere of knowledge: the inputs it sees, plus the rules it was programmed to follow.

The tiers are highly interconnected: each node in tier “X,” for instance, is connected to many nodes in tier “X-1,” whose outputs serve as its inputs, while its own output becomes input data for the nodes in tier “X+1.” When the data reaches the output layer, there may be one or several nodes from which the network’s answer can be read.
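To make the idea of tiers more concrete, here is a minimal Python sketch of a fully connected forward pass. It assumes a sigmoid activation and uses made-up weights; the function names `dense_layer` and `forward` and all numeric values are hypothetical, chosen only for illustration. Each node in a tier takes every output of the previous tier, weights it, adds a bias, and passes the sum through its activation function.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected tier: node j computes
    sum_i weights[j][i] * inputs[i] + biases[j], then applies the activation."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs


def forward(inputs, layers):
    """Feed data through the tiers in order; each tier sees only the previous tier's output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> hidden tier of 2 nodes -> output tier of 1 node.
layers = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2]], [0.0, 0.1]),
    ([[1.5, -1.0]], [-0.2]),
]
print(forward([1.0, 0.5, -1.0], layers))  # a one-element list with a value between 0 and 1
```

In a real network these weights are learned during training, and libraries compute each tier as a single matrix operation rather than with nested Python loops.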

One of the most important parts of a neural network and its nodes is the activation function. A node’s activation function defines that node’s output given an input or set of inputs. For instance, a standard integrated circuit can be seen as a digital network of activation functions that are either "ON" (1) or "OFF" (0), depending on the input.
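As a rough illustration, the sketch below compares the binary "ON"/"OFF" step function from the circuit analogy with two smooth activation functions widely used in practice, sigmoid and ReLU. Placing the step function’s threshold at zero is an assumption made for the example.

```python
import math


def step(z: float) -> int:
    """Binary activation: 'ON' (1) once the input reaches the threshold 0, otherwise 'OFF' (0)."""
    return 1 if z >= 0 else 0


def sigmoid(z: float) -> float:
    """Smooth activation that maps any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Rectified linear unit: passes positive inputs through, outputs 0 for negative ones."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  step={step(z)}  sigmoid={sigmoid(z):.3f}  relu={relu(z)}")
```

Smooth activations such as sigmoid are popular partly because they have useful gradients, which gradient-based training relies on; a hard step function does not.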

Experts celebrate artificial neural networks for being adaptive: they modify themselves as they learn from their initial training, and subsequent runs provide even more information about the world. The most basic learning model revolves around weighting the input streams (how much importance each node gives to the input data from each preceding node) and then increasing the weight of the inputs that contributed to the correct answer.

How does it train itself?

This question is at the heart of this article, and although it might seem difficult to grasp at first, we’ll get to it through examples.

Usually, an artificial neural network’s initial training involves feeding it large amounts of data. In its most basic form, this training provides an input and tells the network what the desired output should be. For instance, if we wanted to build a network that identifies bird species, the initial training data could be a series of pictures of birds, animals that aren’t birds, planes, and other flying objects.

Each input would be accompanied by a matching identification, such as the bird’s name or a “not bird” or “not animal” label. These answers allow the model to adjust its internal weights so that it identifies bird species as accurately as possible.
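A minimal sketch of this kind of supervised training is the classic perceptron learning rule. The example below simplifies the bird problem to a yes/no “bird” label and uses three hypothetical hand-made features instead of pictures; the feature names, the data, and the learning rate are all assumptions for illustration.

```python
def predict(weights, bias, features):
    """Fire (1) if the weighted sum of the inputs is above zero, otherwise 0."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if z > 0 else 0


def train_perceptron(examples, learning_rate=0.1, max_epochs=100):
    """Perceptron learning rule: whenever a prediction is wrong,
    nudge the weights toward the labeled answer."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every labeled example is now classified correctly
            break
    return weights, bias


# Hypothetical toy features: [has_feathers, has_wings, has_engine]; label 1 = "bird".
examples = [
    ([1, 1, 0], 1),  # robin
    ([0, 1, 1], 0),  # plane
    ([0, 0, 0], 0),  # dog
    ([0, 1, 0], 0),  # bat
]
weights, bias = train_perceptron(examples)
print([predict(weights, bias, x) for x, _ in examples])  # → [1, 0, 0, 0]
```

A single perceptron can only separate classes with a straight line (or plane), which is one reason real image classifiers stack many tiers of nodes.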

For example, suppose nodes Alpha, Beta, and Gamma tell node Delta that the current input image is a picture of a hawk, but node Epsilon says it’s a condor. If the training program confirms that it is, in fact, a hawk, node Delta will decrease the weight it assigns to Epsilon’s input and increase the importance it gives to the information from Alpha, Beta, and Gamma.
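The Alpha-to-Epsilon example can be sketched as a weighted vote. This is a simplified heuristic in the spirit of weighted majority voting, not the gradient-based backpropagation real networks typically use, and the `boost` and `penalty` factors are arbitrary assumptions.

```python
def weighted_vote(votes, weights):
    """Node Delta's decision: add up the weight behind each label and pick the heaviest."""
    totals = {}
    for node, label in votes.items():
        totals[label] = totals.get(label, 0.0) + weights[node]
    return max(totals, key=totals.get)


def update_weights(votes, weights, correct_label, boost=1.5, penalty=0.5):
    """Trust the nodes that agreed with the confirmed answer more, and the others less."""
    return {
        node: (w * boost if votes[node] == correct_label else w * penalty)
        for node, w in weights.items()
    }


weights = {"Alpha": 1.0, "Beta": 1.0, "Gamma": 1.0, "Epsilon": 1.0}
votes = {"Alpha": "hawk", "Beta": "hawk", "Gamma": "hawk", "Epsilon": "condor"}

print(weighted_vote(votes, weights))  # → hawk
weights = update_weights(votes, weights, correct_label="hawk")
print(weights)  # → {'Alpha': 1.5, 'Beta': 1.5, 'Gamma': 1.5, 'Epsilon': 0.5}
```

After the update, Epsilon’s opinion counts for a third as much as each of the others, so it would need far stronger support to change Delta’s answer next time.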

When defining the rules and making determinations (that is, each node’s decision on what to send to the next tier based on the inputs from the previous tier), neural networks rely on several principles, including gradient-based training, fuzzy logic, genetic algorithms, and Bayesian methods. They might also be given some basic rules about object relationships in the modeled data.
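Of the principles listed above, gradient-based training is the most widely used today. The sketch below is a hypothetical toy rather than a production recipe: it trains a single sigmoid neuron with stochastic gradient descent on the cross-entropy loss to learn the logical OR function. The learning rate and number of epochs are assumptions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability output of a single sigmoid neuron."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mean_loss(data, w, b):
    """Average cross-entropy between the neuron's outputs and the labels."""
    total = 0.0
    for x, y in data:
        p = predict_proba(w, b, x)
        total -= math.log(p) if y == 1 else math.log(1 - p)
    return total / len(data)


def train(data, learning_rate=0.5, epochs=2000):
    """Stochastic gradient descent: after each example, step against the loss gradient."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            grad_z = predict_proba(w, b, x) - y  # dLoss/dz for sigmoid + cross-entropy
            w = [wi - learning_rate * grad_z * xi for wi, xi in zip(w, x)]
            b -= learning_rate * grad_z
    return w, b


# Toy labeled data: the logical OR of two inputs.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train(data)
print("loss before:", round(mean_loss(data, [0.0, 0.0], 0.0), 3))
print("loss after:", round(mean_loss(data, w, b), 3))
print([1 if predict_proba(w, b, x) > 0.5 else 0 for x, _ in data])  # → [0, 1, 1, 1]
```

Backpropagation applies this same gradient step to every weight in a multi-tier network, using the chain rule to pass the error backward from the output layer.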

3 types of neural networks

Neural networks are often divided into 3 distinct types:

1. Feed-forward neural networks

They are one of the most common and straightforward variants of neural networks. In a nutshell, feed-forward neural networks pass information in just one direction: from the input nodes (in the input layer), through any hidden layers, to the output nodes (in the output layer).

These networks may or may not have hidden layers; those with few or no hidden layers are relatively easy to interpret. This computational model is used in facial recognition and other computer vision technologies.

2. Recurrent neural networks

These neural networks are inherently more complex. They save the output of processing nodes and feed the results back into the model, which is how the model learns to predict the outcome of a layer. Each node in a recurrent neural network acts as a memory cell, carrying information forward as computation continues. These models fall under the deep learning category and often contain many hidden layers.

In addition, this type of neural network shares something with feed-forward neural networks: both start with the same forward propagation. However, recurrent neural networks retain information from previous steps to reuse later.

If the network’s predictions are incorrect, the system adjusts itself during backpropagation and keeps working towards the correct prediction. This type of neural network is often used in text-to-speech conversion software.
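The “memory” of a recurrent network can be illustrated with a single recurrent unit. In this sketch, the weights `w_in`, `w_rec`, and `bias` are hypothetical values, and training (backpropagation through time) is omitted; it only shows how the hidden state `h` is fed back in at each step, so that the final output depends on earlier inputs.

```python
import math


def rnn_forward(sequence, w_in, w_rec, bias):
    """Single-unit recurrent cell: the new hidden state mixes the current input
    with the previous hidden state, so earlier inputs keep influencing later outputs."""
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states


# Same final input (0.0), different histories -> different final states.
with_history = rnn_forward([1.0, 0.0, 0.0], w_in=0.8, w_rec=0.5, bias=0.0)
without_history = rnn_forward([0.0, 0.0, 0.0], w_in=0.8, w_rec=0.5, bias=0.0)
print(round(with_history[-1], 3), without_history[-1])
```

A feed-forward node given the input 0.0 would always produce the same output; here the first input still echoes two steps later, fading as it is repeatedly multiplied by `w_rec`.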

3. Convolutional neural networks

They are one of the most popular models today. This model uses a variation of multilayer perceptrons (networks built from perceptrons, a simple model of a biological neuron) and contains one or more convolutional layers, which can be either fully connected or pooled.

Convolutional layers create feature maps, each of which records features from regions of a much bigger image; the image is effectively broken into small rectangles that are processed one by one. These networks are prevalent in image recognition in many advanced artificial intelligence applications.
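The sketch below shows, under simplifying assumptions, how a convolutional layer builds a feature map and how pooling condenses it. A hypothetical 2x2 vertical-edge kernel slides over a tiny 5x5 “image,” and 2x2 max pooling keeps the strongest response in each block. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution: slide the kernel over every rectangle of the
    image and record one weighted sum per position in the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool(feature_map, size=2):
    """Keep only the strongest response in each non-overlapping size x size block."""
    return [
        [max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(feature_map[0]) - size + 1, size)]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# A dark-to-bright vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # hypothetical vertical-edge detector
feature_map = convolve2d(image, kernel)
pooled = max_pool(feature_map)
print(feature_map)  # → [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)       # → [[2, 0], [2, 0]]
```

In a trained network the kernel values are learned rather than hand-picked, and each convolutional layer typically applies many kernels to produce many feature maps.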

Examples include facial recognition, text digitization, and natural language processing. Other popular uses include paraphrase detection, signal processing, and image classification.

Neural networks vs. deep learning

Deep learning and neural networks tend to be used interchangeably in conversation, which is confusing. It’s worth noting that the “deep” in deep learning refers to the depth of layers in a neural network; networks with many layers are often called deep neural networks.

Thus, a neural network consisting of more than three layers (including the input and the output layers) can be considered a deep learning algorithm, while a neural network with only two or three layers is a basic neural network. It’s essential to remember this distinction when referring to the two, as confusing them is a common naming mistake.

How are Neural Networks being used today?

Neural networks are applied to a broad range of problems and can assess many different types of input, including images, videos, files, databases, and more. They also do not require explicit programming to interpret the content of those inputs.

Because of the generalized approach to problem-solving that neural networks offer, they can be applied in a very wide range of areas. Some common applications today include image/pattern recognition, trajectory prediction for self-driving vehicles, facial recognition, data mining, email spam filtering, medical diagnosis, and cancer research. There are many more ways to use neural nets today, and adoption is increasing rapidly.

How can neural networks be used in ITSM?

As explained above, there are many ways in which neural networks could be applied to the IT world. When it comes to ITSM, though, perhaps the ideas that first spring to mind are efficiency and a better user experience. Ticketing systems rely on features such as change priority and change category, and since neural networks can learn from data through self-training models, an artificial intelligence helping hand could make this process easier for agents.

That is where InvGate Support Assist comes into play. Powered by AI algorithms, Support Assist is one of the market's leading AI engines for help desks. Its main goal is to significantly speed up first response times while improving the accuracy of the data handled, for better routing and reporting.

The bottom line

In this piece, we’ve learned that neural networks are software or hardware systems designed to mimic how human neurons work. We’ve also analyzed how they work and found that they operate in layers: the input layer receives the data, which is then fed through subsequent layers of nodes (called hidden layers) and presented as a result by the output layer nodes.

We have also unveiled the mystery behind how they train: their nodes constantly adjust their weights to match the desired outputs. We then looked at three types of computational models with different approaches and applications, and distinguished deep learning from neural networks while explaining how the two relate.

As a closing remark, we’d like to say that though they might seem daunting initially, neural networks have been and continue to be extremely useful in the current ITSM landscape, and new capabilities and uses for service desks continue to emerge every day.

In this sense, companies looking to leap into the new generation of ITSM services would be wise to start researching what the fuss is all about. Just don’t worry about them taking over the world; having them change the world for the better sounds like a more positive and likely outcome after all!

Frequently asked questions

What are the 3 different types of neural networks?

The three types of neural networks are feed-forward neural networks, recurrent neural networks, and convolutional neural networks.

What are the 3 components of the neural network?

The three components of neural networks are:

- The input layer

- The processing (hidden) layer

- The output layer

What are the main problems with neural networks?

- The lack of rules for determining the proper network structure means the appropriate artificial neural network architecture can only be found through trial and error and experience.

- The requirement of processors with parallel processing abilities makes neural networks hardware-dependent, which is especially true of deep learning networks.

- The network works with numerical information, so every problem must be translated into numerical values before it is presented to the neural network.

- One of the most significant disadvantages of neural networks is the lack of explanation behind the solutions they produce.

- The inability to explain the why or how behind the solution generates a lack of trust in the network.
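To illustrate the point about numerical inputs, a common way to turn categorical data into numbers is one-hot encoding. The sketch below assumes a fixed list of categories; the ticket categories themselves are hypothetical, chosen only to give an ITSM flavor.

```python
def one_hot(value, categories):
    """Map a categorical value to a vector with a single 1 at its category's position."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if value == c else 0 for c in categories]


# Hypothetical ticket categories, for illustration only.
CATEGORIES = ["hardware", "software", "network", "access"]
tickets = ["network", "access", "hardware"]
encoded = [one_hot(t, CATEGORIES) for t in tickets]
print(encoded)  # → [[0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0]]
```

One-hot vectors avoid implying a false ordering (as mapping the categories to 1, 2, 3, 4 would); free-text fields such as ticket descriptions need other encodings, such as word counts or learned embeddings.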

What are the 4 features of a neural network?

The four features, or types of layers, of a neural network are:

- Fully connected layer

- Convolution layer

- Deconvolution layer

- Recurrent layer